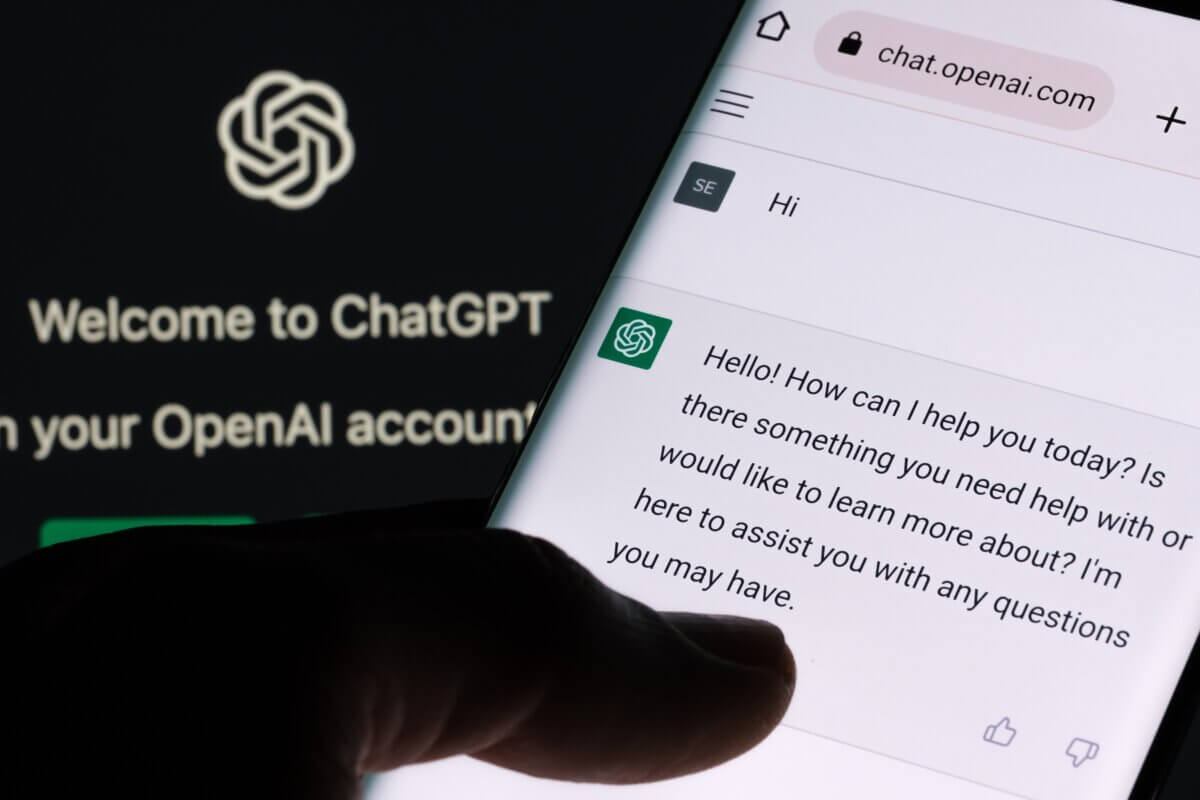

Artificial Intelligence (AI) (© NicoElNino - stock.adobe.com)

It’s been nearly two years since generative artificial intelligence was made widely available to the public. Some models showed great promise by passing academic and professional exams.

For instance, GPT-4 scored higher than 90% of the United States bar exam test takers. These successes led to concerns AI systems might also breeze through university-level assessments. However, my recent study paints a different picture, showing generative AI isn’t quite the academic powerhouse some might think it is.

My study

To explore generative AI’s academic abilities, I looked at how it performed on an undergraduate criminal law final exam at the University of Wollongong – one of the core subjects students need to pass in their degrees. There were 225 students doing the exam.

The exam ran for three hours and had two sections. The first asked students to evaluate a case study about criminal offenses – and the likelihood of a successful prosecution. The second included a short essay and a set of short-answer questions.

The test questions evaluated a mix of skills, including legal knowledge, critical thinking, and the ability to construct persuasive arguments.

Students were not allowed to use AI for their responses and did the assessment in a supervised environment.

I used different AI models to create ten distinct answers to the exam questions.

Five papers were generated by just pasting the exam question into the AI tool without any additional prompting. For the other five, I gave detailed prompts and relevant legal content to see if that would improve the outcome.

I handwrote the AI-generated answers in official exam booklets and used fake student names and numbers. These AI-generated answers were mixed with actual student exam answers and anonymously given to five tutors for grading.

Importantly, when marking, the tutors did not know AI had generated ten of the exam answers.

How did the AI papers perform?

When the tutors were interviewed after marking, none of them suspected any answers were AI-generated.

This shows the potential for AI to mimic student responses and educators’ inability to spot such papers.

But on the whole, the AI papers were not impressive.

While the AI did well in the essay-style question, it struggled with complex questions that required in-depth legal analysis.

This means even though AI can mimic human writing style, it lacks the nuanced understanding needed for complex legal reasoning.

The students’ exam average was 66%.

The AI papers that had no prompting, on average, only beat 4.3% of students. Two barely passed (the pass mark is 50%), and three failed.

In terms of the papers where prompts were used, on average, they beat 39.9% of students. Three of these papers weren’t impressive and received 50%, 51.7%, and 60%, but two did quite well. One scored 73.3%, and the other scored 78%.
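As a quick check on the figures above, here is a minimal Python sketch that works only from the five prompted-paper scores, the pass mark and the class average reported in this article. The variable names are illustrative, and treating a score of exactly 50% as a pass is an assumption.

```python
from statistics import mean

# Scores (%) reported in the article for the five prompted AI papers.
prompted_scores = [50.0, 51.7, 60.0, 73.3, 78.0]

PASS_MARK = 50.0      # pass mark stated in the article
CLASS_AVERAGE = 66.0  # student exam average stated in the article

prompted_mean = mean(prompted_scores)

# Assumption: a score equal to the pass mark counts as a pass.
passed = [s for s in prompted_scores if s >= PASS_MARK]
above_class_average = [s for s in prompted_scores if s > CLASS_AVERAGE]

print(f"Mean of prompted AI papers: {prompted_mean:.1f}%")
print(f"Papers at or above the pass mark: {len(passed)} of {len(prompted_scores)}")
print(f"Papers above the class average: {len(above_class_average)} of {len(prompted_scores)}")
```

Under these assumptions, the prompted papers average 62.6%, below the 66% student average, and only two of the five score above that average. The "beat 39.9% of students" figure is a percentile rank, so it cannot be recomputed without the full distribution of student marks, which is not given here.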

What does this mean?

These findings have important implications for both education and professional standards.

Despite the hype, generative AI isn’t close to replacing humans in intellectually demanding tasks such as this law exam.

My study suggests AI should be viewed more like a tool, and when used properly, it can enhance human capabilities.

So schools and universities should concentrate on developing students’ skills to collaborate with AI and analyze its outputs critically rather than relying on the tools’ ability to simply spit out answers.

Further, to make collaboration between AI and students possible, we may have to rethink some of the traditional notions we have about education and assessment.

For example, we might consider that when a student prompts, verifies and edits an AI-generated work, that is their original contribution and should still be viewed as a valuable part of learning.

Armin Alimardani is a lecturer in the School of Law at the University of Wollongong.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Despite not doing as well as the author had expected, in my opinion, they all still did incredibly well for being artificial intelligence lol!

“The AI papers that had no prompting, on average, only beat 4.3% of students. Two barely passed (the pass mark is 50%), and three failed.”

So doing NOTHING besides simply pasting the question into the box, 2 of the AI papers passed a final college exam! 5 seconds’ worth of ‘work’ & boom you’ve passed a college law class final exam! INCREDIBLE in my opinion! Not sure why the author ISN’T impressed by this fact!

“In terms of the papers where prompts were used, on average, they beat 39.9% of students. Three of these papers weren’t impressive and received 50%, 51.7%, and 60%, but two did quite well. One scored 73.3%, and the other scored 78%.”

So when you take another minute of ‘work’ & paste it into prompts, you crush 40% of the other students’ scores??? & the author ISN’T highly impressed??? What??? It looks like they all passed with scores of 50% or above!

Then it’s left out that scoring is subjective, whoever is marking these tests. These papers might’ve done far better (or worse) with other eyeballs on them. I’d venture to guess that the scores would still pass & be better with other eyes on them because you’d NOT fail that test if it’s enough to pass it & contains what’s needed to pass. Therefore the score might possibly be even higher, or way higher, if you tested this scenario with other people scoring this college class final law exam.

The author ISN’T impressed but I sure am! This will only improve over time & they’re mostly passing right now.